GreifbAR - Extended Reality in Surgical Training

Rais, Queisner (CC BY-NC-ND)

The aim of the project is to develop an intelligent learning system that uses a mixed reality application to teach surgical staff complex gestures and the handling of surgical tools.

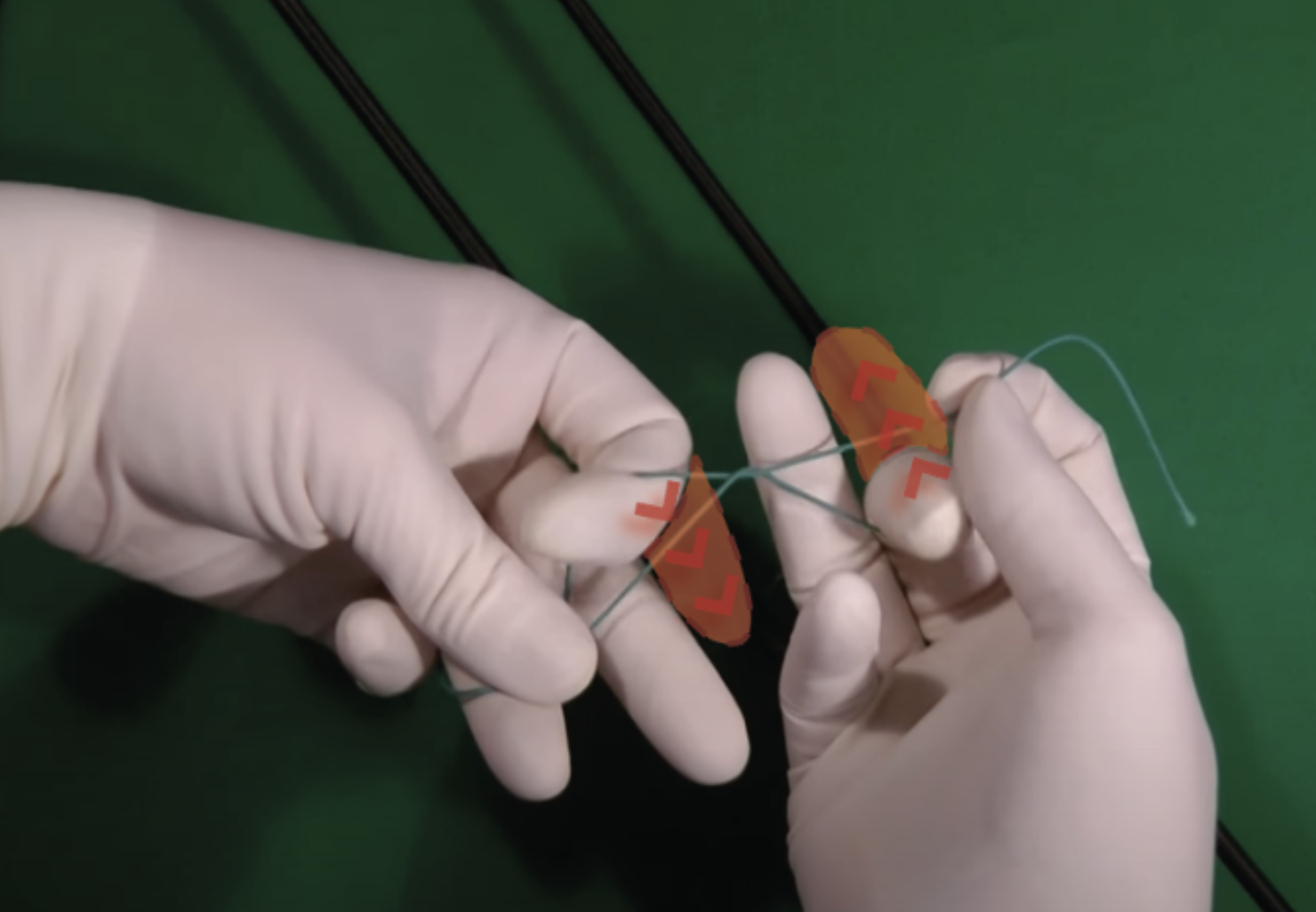

The objective of the project is to build an application that simulates surgical knot-tying and suturing techniques. Drawing on new machine learning methods for capturing hands and objects, the application should allow users to practice the fine manipulation of bodies and objects from a first-person perspective in 4D space. Digital learning is usually based on observation and lacks interaction. The GreifbAR application, by contrast, will include virtual hands and other mixed reality elements that guide the user's learning experience. The quality and accuracy of the user's gestures and finger positions can be recorded and evaluated in real time.

The learning system is intended for training surgical staff in the early stages of their careers. It aims to improve the quality of education, reduce training costs, and minimize both risks and the use of animal experiments. An expansion to other surgical learning situations is planned.

Info

Funding: €1.65 million (Federal Ministry of Education and Research)

Partners: German Research Center for Artificial Intelligence, Kaiserslautern; Chair of Psychology and Human-Machine Interaction, University of Passau; NMY Mixed-Reality Communication GmbH

Website: www.interaktive-technologien.de/projekte/greifbar

Sauer (CC BY-NC-ND)