The publication "Redefining the Laparoscopic Spatial Sense: AI-based Intra- and Postoperative Measurement from Stereoimages" has been accepted for the 38th AAAI Conference on Artificial Intelligence and is available via https://doi.org/10.48550/arXiv.2311.09744. The publication is the result of a fruitful collaboration between Karlsruhe Institute of Technology (KIT), Fraunhofer FIT, University of Bayreuth, and Charité – Universitätsmedizin Berlin. The authors are Leopold Müller, Patrick Hemmer, Moritz Queisner, Igor Sauer, Simeon Allmendinger, Johannes Jakubik, Michael Vössing, and Niklas Kühl.

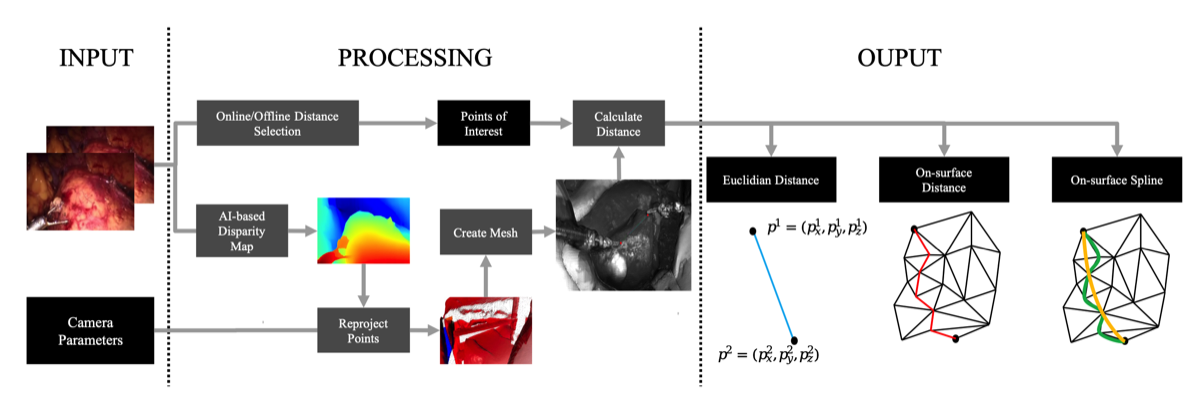

A significant challenge in image-guided surgery is the accurate measurement of relevant structures such as vessel segments, resection margins, or bowel lengths. While this task is an essential component of many surgeries, it involves substantial human effort and is prone to inaccuracies. In this paper, we develop a novel human-AI-based method for laparoscopic measurements that utilizes stereo vision and has been guided by practicing surgeons. Based on a holistic qualitative requirements analysis, this work proposes a comprehensive measurement method that comprises state-of-the-art machine learning architectures, such as RAFT-Stereo and YOLOv8. The developed method is assessed in various realistic experimental evaluation environments. Our results outline the potential of our method, which achieves high accuracy in distance measurements with errors below 1 mm. Furthermore, on-surface measurements demonstrate robustness when applied in challenging environments with textureless regions. Overall, by addressing the inherent challenges of image-guided surgery, we lay the foundation for a more robust and accurate solution for intra- and postoperative measurements, enabling more precise, safe, and efficient surgical procedures.
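To illustrate the general idea behind stereo-based distance measurement, the following minimal sketch back-projects two pixels into 3D using a disparity map and the standard pinhole stereo relation, then reports their straight-line distance. It is not the authors' implementation: the function names, the calibration values (focal length, baseline, principal point), and the constant disparity map are hypothetical, and in practice the disparity would come from a stereo matcher such as RAFT-Stereo. On-surface measurements, as mentioned above, would require following the reconstructed surface rather than a straight line.

```python
import numpy as np


def pixel_to_point(disparity, u, v, focal_px, baseline_mm, cx, cy):
    """Back-project pixel (u, v) of a rectified left image to 3D (in mm).

    Uses the pinhole stereo relation Z = f * B / d. All calibration
    parameters are assumed to be known from a prior stereo calibration.
    """
    d = float(disparity[v, u])  # row index is v, column index is u
    if d <= 0.0:
        raise ValueError("disparity must be positive to triangulate a point")
    z = focal_px * baseline_mm / d
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return np.array([x, y, z])


def straight_line_distance_mm(disparity, p1, p2, focal_px, baseline_mm, cx, cy):
    """Euclidean distance in mm between two pixels given as (u, v) tuples."""
    a = pixel_to_point(disparity, p1[0], p1[1], focal_px, baseline_mm, cx, cy)
    b = pixel_to_point(disparity, p2[0], p2[1], focal_px, baseline_mm, cx, cy)
    return float(np.linalg.norm(a - b))


# Hypothetical example: a flat scene 100 mm away from the camera
# (focal length 1000 px, baseline 5 mm, constant disparity 50 px).
disparity_map = np.full((480, 640), 50.0)
distance = straight_line_distance_mm(
    disparity_map, (320, 240), (420, 240),
    focal_px=1000.0, baseline_mm=5.0, cx=320.0, cy=240.0,
)
print(f"{distance:.1f} mm")  # prints "10.0 mm"
```

With these assumed values, two points 100 px apart at a depth of 100 mm lie 10 mm apart. Because depth is inversely proportional to disparity, small disparity errors grow into larger depth errors at greater distances, which is one reason an accurate disparity estimate matters for sub-millimeter measurement.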